The Illusion of Plug-and-Play AI

Large Language Models (LLMs) are revolutionizing how we interact with data: they generate reports, summarize customer feedback, and even recommend actions.

But when it comes to the deep, granular, structured data that banks hold—like account-level loan repayment histories or credit bureau records—LLMs alone hit a wall.

Why? Because these models aren’t designed to handle vast, raw datasets out of the box. That’s where machine learning (ML) comes in. ML transforms complex structured data into formats that LLMs can interpret—so the insights you’re expecting actually emerge.

Why LLMs Struggle with Banking’s Granular Data

LLMs excel at interpreting natural language and summarizing unstructured data like call transcripts or support emails. But hand them 10 million rows of loan data, and they’ll flounder.

- LLMs can’t “understand” structured data unless it’s already transformed into summaries, patterns, or simplified formats.

- Without preprocessing, the noise in raw data leads to confusion, hallucinations, or incomplete insights.

💬 Example: Asking an LLM, “Why are early delinquencies rising in Segment B?” with raw repayment data won’t yield anything useful. It needs clean trend summaries and feature signals first, which can be produced by ML.

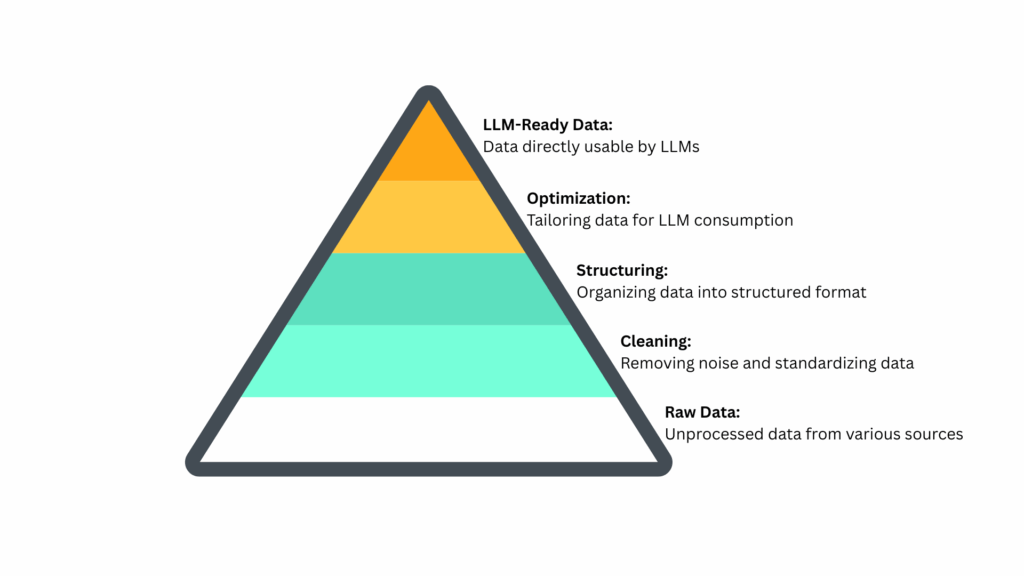

ML as the Preprocessing Engine for LLM Success

ML bridges the gap between data complexity and insight extraction by:

- Cleaning: Identifies and corrects inconsistencies in repayment data (e.g., misreported arrears, duplicate records).

- Aggregating: Rolls up account-level data into portfolio trends (e.g., average days past due by cohort).

- Feature Engineering: Derives variables like “first-payment default risk” or “utilization spikes,” which are crucial for downstream analysis.

- Pattern Recognition: Flags emerging shifts that the LLM can then explain in natural language.
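As a rough illustration of the cleaning, aggregating, and feature engineering steps above, here is a minimal sketch in plain Python. The records, field names, and the rule of clamping negative arrears to zero are all assumptions made for the example, not a description of any particular bank's data or of a production pipeline.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical account-level repayment records; field names are illustrative.
records = [
    {"account_id": "A1", "cohort": "2024-Q1", "days_past_due": 0, "missed_first_payment": False},
    {"account_id": "A2", "cohort": "2024-Q1", "days_past_due": 30, "missed_first_payment": True},
    {"account_id": "A2", "cohort": "2024-Q1", "days_past_due": 30, "missed_first_payment": True},  # duplicate record
    {"account_id": "A3", "cohort": "2024-Q2", "days_past_due": -5, "missed_first_payment": False},  # misreported arrears
    {"account_id": "A4", "cohort": "2024-Q2", "days_past_due": 60, "missed_first_payment": True},
]

def clean(rows):
    """Keep the first record per account and clamp negative arrears to zero (assumed rule)."""
    seen, cleaned = set(), []
    for row in rows:
        if row["account_id"] in seen:
            continue
        seen.add(row["account_id"])
        cleaned.append({**row, "days_past_due": max(row["days_past_due"], 0)})
    return cleaned

def summarize_by_cohort(rows):
    """Roll account-level rows up into one small summary per cohort."""
    by_cohort = defaultdict(list)
    for row in rows:
        by_cohort[row["cohort"]].append(row)
    return {
        cohort: {
            "accounts": len(group),
            "avg_days_past_due": mean(r["days_past_due"] for r in group),
            # Simple engineered feature: share of accounts that missed the first payment.
            "first_payment_default_rate": mean(1 if r["missed_first_payment"] else 0 for r in group),
        }
        for cohort, group in sorted(by_cohort.items())
    }

cohort_summary = summarize_by_cohort(clean(records))
```

The point of the sketch is the shape of the output: a handful of cohort-level figures rather than millions of raw rows.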

Only after ML has performed this heavy lifting can LLMs meaningfully respond to prompts like:

“Summarize the drivers of deteriorating credit performance in Q2.”
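One way to picture the hand-off is a small function that renders ML-produced summaries as compact text and attaches the analyst's question. This is a sketch only: `build_prompt`, the summary fields, and the figures are hypothetical, and the call to an actual LLM is deliberately left out.

```python
def build_prompt(question, cohort_summary):
    """Render aggregated metrics as compact text and append the analyst's question."""
    lines = [
        f"- {cohort}: {m['accounts']} accounts, "
        f"avg days past due {m['avg_days_past_due']:.1f}, "
        f"first-payment default rate {m['first_payment_default_rate']:.0%}"
        for cohort, m in cohort_summary.items()
    ]
    return (
        "You are assisting a credit risk analyst. Use only the figures below.\n"
        "Portfolio summary by cohort:\n"
        + "\n".join(lines)
        + f"\n\nQuestion: {question}"
    )

# Hypothetical ML-produced summary; the numbers are made up for illustration.
summary = {
    "2024-Q1": {"accounts": 1200, "avg_days_past_due": 12.4, "first_payment_default_rate": 0.03},
    "2024-Q2": {"accounts": 1350, "avg_days_past_due": 19.8, "first_payment_default_rate": 0.06},
}
prompt = build_prompt("Summarize the drivers of deteriorating credit performance in Q2.", summary)
```

The LLM receives a few lines of curated context instead of the underlying account records, which is the division of labor this section describes.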

Figure: Data Transformation Pipeline

Strategic Use Case: From Noise to Narrative

Let’s consider a common bank scenario:

You have millions of records across customer segments, repayment behaviors, and bureau attributes.

With ML alone, you can build predictive models.

With LLMs alone, you can write narratives from pre-cleaned data.

But together:

- ML clusters accounts showing repayment stress.

- ML identifies correlations with bureau score drops or economic factors.

- The LLM surfaces these drivers in plain English, e.g.:

“Rising delinquencies are concentrated in Segment C borrowers with recent bureau score declines and higher-than-average utilization.”

This layered approach turns raw operational data into strategic insights.
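To make the scenario concrete, the sketch below produces the kind of structured findings an LLM could then put into words. Plain per-segment grouping and counting stand in for the clustering and correlation models mentioned above. The account fields, segments, and values are invented for illustration.

```python
from collections import defaultdict

# Hypothetical account snapshot; segments, fields, and values are illustrative.
accounts = [
    {"segment": "A", "delinquent": False, "score_change": 5, "utilization": 0.30},
    {"segment": "A", "delinquent": False, "score_change": -2, "utilization": 0.45},
    {"segment": "C", "delinquent": True, "score_change": -40, "utilization": 0.92},
    {"segment": "C", "delinquent": True, "score_change": -25, "utilization": 0.88},
    {"segment": "C", "delinquent": False, "score_change": 3, "utilization": 0.40},
]

def segment_findings(rows):
    """Per segment: delinquency rate, plus how many delinquent accounts show each stress signal."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["segment"]].append(row)
    portfolio_util = sum(r["utilization"] for r in rows) / len(rows)
    findings = {}
    for segment, group in groups.items():
        late = [r for r in group if r["delinquent"]]
        findings[segment] = {
            "delinquency_rate": len(late) / len(group),
            "late_with_score_drop": sum(r["score_change"] < 0 for r in late),
            "late_above_avg_utilization": sum(r["utilization"] > portfolio_util for r in late),
        }
    return findings

findings = segment_findings(accounts)
# Segment with the highest delinquency rate in this toy data set.
worst = max(findings, key=lambda s: findings[s]["delinquency_rate"])
```

In practice the grouping step would be replaced by whatever segmentation or clustering model the bank already trusts. What matters for the LLM is that it receives findings of this size and shape.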

Business Impact: Why This Matters Now

| Challenge | Solution with ML + LLM |
| --- | --- |
| Unusable raw data (volume + complexity) | ML distills it into digestible summaries for LLMs |
| Slow insight generation | Automated preprocessing + natural language analysis reduces turnaround time |
| Compliance and risk exposure | ML ensures data quality before insights are acted upon |
| Poor visibility across teams | LLMs create shareable, readable insight narratives for cross-functional use |

Pair Intelligence with Infrastructure

LLMs hold promise, but in banking, they are only as good as the data they’re fed. Banks already have the goldmine of granular loan, credit, and customer data—but without ML-driven preprocessing, this treasure remains buried.

ML and LLMs aren’t competing technologies—they’re a partnership. ML prepares the battlefield; LLMs deliver the strategy.

At BankersLab, our simulation calculation engine handles the preprocessing heavy lifting, enabling deep, forward-looking insights.

Ask Us for a Demo of an Agentic RAG LLM in action – analyzing lending portfolio data

Learn More about the partnership between ML and LLMs:

https://www.turing.com/resources/understanding-data-processing-techniques-for-llms

https://ramikrispin.substack.com/p/data-preparation-for-llm-the-key